Image analysis is ubiquitous in contemporary technology: from medical diagnostics to autonomous vehicles to facial recognition. Computers that use deep-learning convolutional neural networks—layers of algorithms that process images—have revolutionized computer vision.

But convolutional neural networks, or CNNs, classify images by learning from training data, and they often memorize that data or develop stereotypes. They are also vulnerable to adversarial attacks: small, almost imperceptible distortions in an image that lead to bad decisions. These drawbacks limit the usefulness of CNNs. Additionally, there is growing awareness of the exorbitant carbon footprint associated with deep learning algorithms like CNNs.

One way to improve the energy efficiency and reliability of image processing algorithms involves combining conventional computer vision with optical preprocessors. Such hybrid systems work with minimal electronic hardware. Since light completes mathematical functions without dissipating energy in the preprocessing stage, significant time and energy savings can be achieved with hybrid computer vision systems. This emerging approach may overcome the shortcomings of deep learning and exploit the advantages of both optics and electronics.

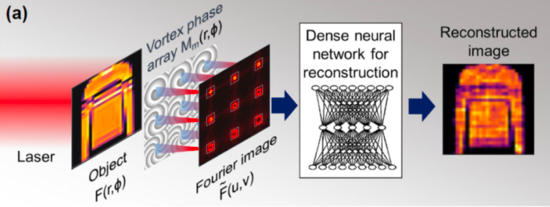

In a recent paper published in Optica, UC Riverside mechanical engineering professor Luat Vuong and doctoral student Baurzhan Muminov demonstrate the viability of hybrid computer vision systems by applying optical vortices, swirling waves of light with a dark central spot. Vortices, which are created when light travels around edges and corners, can be likened to hydrodynamic whirlpools.

Knowledge of vortices can be extended to understand arbitrary wave patterns. When imprinted with vortices, optical image data travels in a manner that highlights and mixes different parts of the optical image. Muminov and Vuong show that vortex image preprocessing with shallow, “small-brain” neural networks, which have only a few layers of algorithms to run through, may function in lieu of CNNs.

“The unique advantage of optical vortices lies in their mathematical, edge-enhancing function,” said Vuong. “In this paper, we show that the optical vortex encoder generates object intensity data in such a manner that a small brain neural network can rapidly reconstruct an original image from its optically preprocessed pattern.”
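The idea of a vortex encoder can be illustrated numerically. The sketch below is not the authors' code or experimental setup. It assumes a simplified model in which a spiral phase is imprinted on the image, a lens is represented by a two-dimensional Fourier transform, and a camera records only intensity. The function names `vortex_encode` and `disk` and the parameter `charge` are hypothetical, chosen for illustration.

```python
import numpy as np


def vortex_encode(image, charge=1):
    """Return the far-field intensity of an image imprinted with a vortex phase.

    Assumed model (illustrative, not taken from the paper): the image is
    multiplied by exp(i * charge * phi), a lens acts as a 2D Fourier
    transform, and the detector records intensity only.
    """
    image = np.asarray(image, dtype=float)
    ny, nx = image.shape
    # Coordinates centered on the middle pixel of the grid.
    y = np.arange(ny) - ny // 2
    x = np.arange(nx) - nx // 2
    xx, yy = np.meshgrid(x, y)
    phi = np.arctan2(yy, xx)  # azimuthal angle around the center
    mask = np.exp(1j * charge * phi)
    if charge != 0:
        # The phase is undefined at the singularity, so the core is dark.
        mask[(xx == 0) & (yy == 0)] = 0.0
    field = image * mask
    # ifftshift puts the grid center at index 0 before the transform;
    # fftshift moves zero spatial frequency back to the array center.
    far_field = np.fft.fftshift(
        np.fft.fft2(np.fft.ifftshift(field), norm="ortho")
    )
    return np.abs(far_field) ** 2


def disk(n=65, radius=12):
    """A centered, filled disk used as a simple test object."""
    y, x = np.indices((n, n)) - n // 2
    return (x**2 + y**2 <= radius**2).astype(float)


if __name__ == "__main__":
    obj = disk()
    center = obj.shape[0] // 2
    plain = vortex_encode(obj, charge=0)    # no vortex: ordinary Fourier pattern
    encoded = vortex_encode(obj, charge=1)  # single-charge vortex
    print("on-axis intensity without vortex:", plain[center, center])
    print("on-axis intensity with vortex:   ", encoded[center, center])
```

For a centered, symmetric object, the on-axis intensity is the brightest point without the vortex and falls to essentially zero with it, which is the dark central spot described above. In this model the light is redistributed rather than absorbed, apart from the single pixel at the vortex core.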

Optical preprocessing flattens the power consumption of image calculations, while the digital electronics identify correlations, provide optimizations, and rapidly calculate reliable decision-making thresholds. With hybrid computer vision, optics offers the advantages of speed and low-power computing, along with a two-orders-of-magnitude reduction in time cost compared with CNNs. Through image compression, it is possible to significantly reduce the electronic back-end hardware, in terms of both memory and computational complexity.

“Our demonstration with vortex encoders shows that optical preprocessing may obviate the need for CNNs, be more robust than CNNs, and have the capacity to generalize solutions to inverse problems, unlike CNNs,” said Vuong. “For example, we show that, when a hybrid neural network learns the shape of handwritten digits, it can subsequently reconstruct Arabic or Japanese characters that it hasn’t seen before.”

Vuong and Muminov’s paper also shows that reducing an image to fewer, high-intensity pixels makes image processing possible in extremely low light. The research offers new insights into the role of photonics in building generalizable small-brain hybrid neural networks and developing real-time hardware for big-data analytics.

The open-access article, "Fourier optical preprocessing in lieu of deep learning," is available from Optica.

Header photo: Original images on left are reconstructed without optical encoding (center) and with optical encoding (right). (Muminov & Vuong, 2020)